Important!

My tech articles—especially Linux ones—are some of the most-viewed on The Z-Issue. If this one has helped you, please consider a small donation to

The Parker Fund by using the top widget at the right. Thanks!

Whew, I know that’s a long title for a post, but I wanted to make sure that I mentioned every term so that people having the same problem could readily find the post that explains what solved it for me. For some time now (ever since the 346.x series [340.76, which was the last driver that worked for me, was released on 27 January 2015]), I have had a problem with the NVIDIA Linux Display Drivers (known as nvidia-drivers in Gentoo Linux). The problem that I’ve experienced is that the newer drivers would, upon starting an X session, immediately clock up to Performance Level 2 or 3 within PowerMizer.

Before using these newer drivers, the Performance Level would only increase when it was really required (3D rendering, HD video playback, et cetera). I probably wouldn’t have even noticed that the Performance Level was changing, except that it would cause the GPU fan to spin faster, which was noticeably louder in my office.

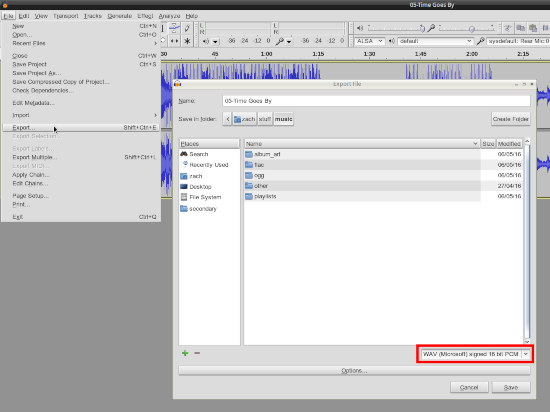

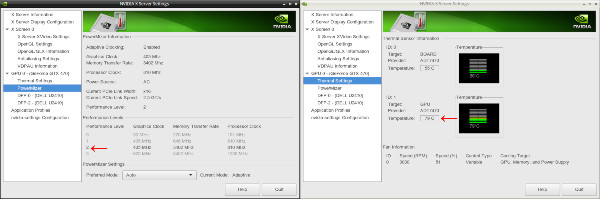

After scouring the interwebs, I found that I was not the only person to have this problem. For reference, see this article, and this one about locking to certain Performance Levels. However, I wasn’t able to find a solution for the exact problem that I was having. If you look at the screenshot below, you’ll see that the Performance Level is set at 2 which was causing the card to run quite hot (79°C) even when it wasn’t being pushed.

Click to enlarge

It turns out that I needed to add some options to my X Server Configuration. Unfortunately, I was originally making changes in /etc/X11/xorg.conf, but they weren’t being honoured. I added the following lines to /etc/X11/xorg.conf.d/20-nvidia.conf, and the changes took effect:

Section "Device"

Identifier "Device 0"

Driver "nvidia"

VendorName "NVIDIA Corporation"

BoardName "GeForce GTX 470"

Option "RegistryDwords" "PowerMizerEnable=0x1; PowerMizerDefaultAC=0x3;"

EndSection

The portion in bold (the RegistryDwords option) was what ultimately fixed the problem for me. More information about the NVIDIA drivers can be found in their README and Installation Guide, and in particular, these settings are described on the X configuration options page. The PowerMizerDefaultAC setting may seem like it is for laptops that are plugged in to AC power, but as this system was a desktop, I found that it was always seen as being “plugged in to AC power.”

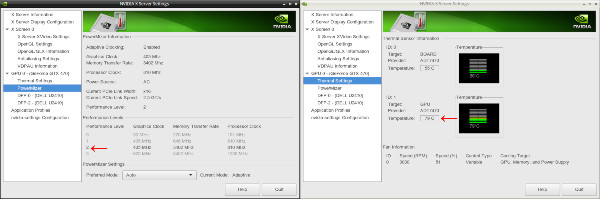

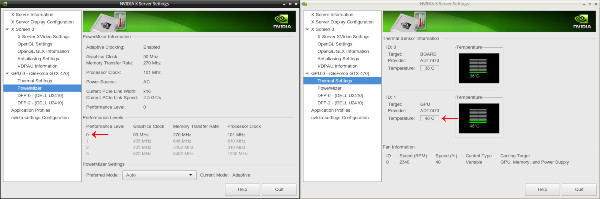

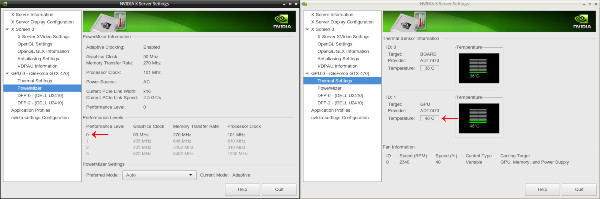

As you can see from the screenshots below, these settings did indeed fix the PowerMizer Performance Levels and subsequent temperatures for me:

Click to enlarge

Whilst I was adding X configuration options, I also noticed that Coolbits (search for “Coolbits” on that page) were supported with the Linux driver. Here’s the excerpt about Coolbits for version 364.19 of the NVIDIA Linux driver:

Option “Coolbits” “integer”

Enables various unsupported features, such as support for GPU clock manipulation in the NV-CONTROL X extension. This option accepts a bit mask of features to enable.

WARNING: this may cause system damage and void warranties. This utility can run your computer system out of the manufacturer’s design specifications, including, but not limited to: higher system voltages, above normal temperatures, excessive frequencies, and changes to BIOS that may corrupt the BIOS. Your computer’s operating system may hang and result in data loss or corrupted images. Depending on the manufacturer of your computer system, the computer system, hardware and software warranties may be voided, and you may not receive any further manufacturer support. NVIDIA does not provide customer service support for the Coolbits option. It is for these reasons that absolutely no warranty or guarantee is either express or implied. Before enabling and using, you should determine the suitability of the utility for your intended use, and you shall assume all responsibility in connection therewith.

When “2” (Bit 1) is set in the “Coolbits” option value, the NVIDIA driver will attempt to initialize SLI when using GPUs with different amounts of video memory.

When “4” (Bit 2) is set in the “Coolbits” option value, the nvidia-settings Thermal Monitor page will allow configuration of GPU fan speed, on graphics boards with programmable fan capability.

When “8” (Bit 3) is set in the “Coolbits” option value, the PowerMizer page in the nvidia-settings control panel will display a table that allows setting per-clock domain and per-performance level offsets to apply to clock values. This is allowed on certain GeForce GPUs. Not all clock domains or performance levels may be modified.

When “16” (Bit 4) is set in the “Coolbits” option value, the nvidia-settings command line interface allows setting GPU overvoltage. This is allowed on certain GeForce GPUs.

When this option is set for an X screen, it will be applied to all X screens running on the same GPU.

The default for this option is 0 (unsupported features are disabled).

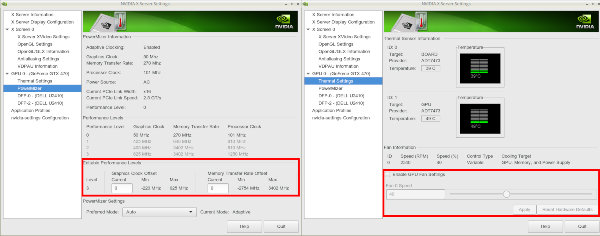

I found that I would personally like to have the options enabled by “4” and “8”, and that one can combine Coolbits by simply adding them together. For instance, the ones I wanted (“4” and “8”) added up to “12”, so that’s what I put in my configuration:

Section "Device"

Identifier "Device 0"

Driver "nvidia"

VendorName "NVIDIA Corporation"

BoardName "GeForce GTX 470"

Option "Coolbits" "12"

Option "RegistryDwords" "PowerMizerEnable=0x1; PowerMizerDefaultAC=0x3;"

EndSection

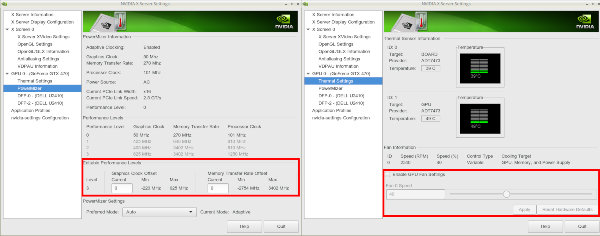

and that resulted in the following options being available within the nvidia-settings utility:

Click to enlarge

Though the Coolbits portions aren’t required to fix the problems that I was having, I find them to be helpful for maintenance tasks and configurations. I hope, if you’re having problems with the NVIDIA drivers, that these instructions help give you a better understanding of how to workaround any issues you may face. Feel free to comment if you have any questions, and we’ll see if we can work through them.

Cheers,

Zach