Whew, I know that’s a long title for a post, but I wanted to make sure that I mentioned every term so that people having the same problem could readily find the post that explains what solved it for me. For some time now (ever since the 346.x series [340.76, which was the last driver that worked for me, was released on 27 January 2015]), I have had a problem with the NVIDIA Linux Display Drivers (known as nvidia-drivers in Gentoo Linux). The problem that I’ve experienced is that the newer drivers would, upon starting an X session, immediately clock up to Performance Level 2 or 3 within PowerMizer.

Before using these newer drivers, the Performance Level would only increase when it was really required (3D rendering, HD video playback, et cetera). I probably wouldn’t have even noticed that the Performance Level was changing, except that it would cause the GPU fan to spin faster, which was noticeably louder in my office.

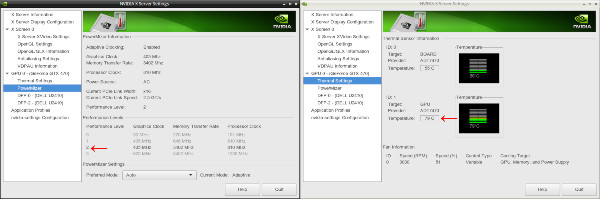

After scouring the interwebs, I found that I was not the only person to have this problem. For reference, see this article, and this one about locking to certain Performance Levels. However, I wasn’t able to find a solution for the exact problem that I was having. If you look at the screenshot below, you’ll see that the Performance Level is set at 2, which was causing the card to run quite hot (79°C) even when it wasn’t being pushed.


It turns out that I needed to add some options to my X Server Configuration. Unfortunately, I was originally making changes in /etc/X11/xorg.conf, but they weren’t being honoured. I added the following lines to /etc/X11/xorg.conf.d/20-nvidia.conf, and the changes took effect:

    Section "Device"
        Identifier "Device 0"
        Driver "nvidia"
        VendorName "NVIDIA Corporation"
        BoardName "GeForce GTX 470"
        Option "RegistryDwords" "PowerMizerEnable=0x1; PowerMizerDefaultAC=0x3;"
    EndSection

The RegistryDwords option was what ultimately fixed the problem for me. More information about the NVIDIA drivers can be found in their README and Installation Guide, and in particular, these settings are described on the X configuration options page. The PowerMizerDefaultAC setting may seem like it is for laptops that are plugged in to AC power, but as this system was a desktop, I found that it was always seen as being “plugged in to AC power.”
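For anyone who prefers to script the change, here is a minimal sketch that writes the Device section above into a drop-in file. The function name `write_nvidia_dropin` is hypothetical, the BoardName is the one from my card and should be adjusted for yours, and the default directory assumes your distribution reads `/etc/X11/xorg.conf.d`:

```shell
# Hypothetical helper: write the Device section from this article into
# 20-nvidia.conf inside the directory given as $1.
# Default directory (an assumption about your distribution): /etc/X11/xorg.conf.d
write_nvidia_dropin() {
    local dir="${1:-/etc/X11/xorg.conf.d}"
    local file="$dir/20-nvidia.conf"

    # Create the drop-in directory if it does not exist yet.
    mkdir -p "$dir" || return 1

    # Quoted heredoc delimiter: the text is written literally, no expansion.
    cat > "$file" <<'EOF'
Section "Device"
    Identifier "Device 0"
    Driver     "nvidia"
    VendorName "NVIDIA Corporation"
    BoardName  "GeForce GTX 470"
    Option     "RegistryDwords" "PowerMizerEnable=0x1; PowerMizerDefaultAC=0x3;"
EndSection
EOF

    # Print the path that was written so the caller can inspect it.
    printf '%s\n' "$file"
}
```

Calling `write_nvidia_dropin /tmp/xorg-test` first lets you inspect the result before running it as root against the real directory. X has to be restarted before the settings take effect.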

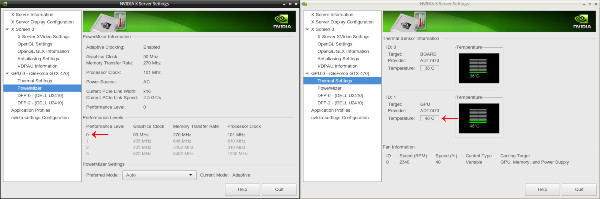

As you can see from the screenshots below, these settings did indeed fix the PowerMizer Performance Levels and subsequent temperatures for me:


Whilst I was adding X configuration options, I also noticed that Coolbits (search for “Coolbits” on that page) were supported with the Linux driver. Here’s the excerpt about Coolbits for version 364.19 of the NVIDIA Linux driver:

Option “Coolbits” “integer”

Enables various unsupported features, such as support for GPU clock manipulation in the NV-CONTROL X extension. This option accepts a bit mask of features to enable.

WARNING: this may cause system damage and void warranties. This utility can run your computer system out of the manufacturer’s design specifications, including, but not limited to: higher system voltages, above normal temperatures, excessive frequencies, and changes to BIOS that may corrupt the BIOS. Your computer’s operating system may hang and result in data loss or corrupted images. Depending on the manufacturer of your computer system, the computer system, hardware and software warranties may be voided, and you may not receive any further manufacturer support. NVIDIA does not provide customer service support for the Coolbits option. It is for these reasons that absolutely no warranty or guarantee is either express or implied. Before enabling and using, you should determine the suitability of the utility for your intended use, and you shall assume all responsibility in connection therewith.

When “2” (Bit 1) is set in the “Coolbits” option value, the NVIDIA driver will attempt to initialize SLI when using GPUs with different amounts of video memory.

When “4” (Bit 2) is set in the “Coolbits” option value, the nvidia-settings Thermal Monitor page will allow configuration of GPU fan speed, on graphics boards with programmable fan capability.

When “8” (Bit 3) is set in the “Coolbits” option value, the PowerMizer page in the nvidia-settings control panel will display a table that allows setting per-clock domain and per-performance level offsets to apply to clock values. This is allowed on certain GeForce GPUs. Not all clock domains or performance levels may be modified.

When “16” (Bit 4) is set in the “Coolbits” option value, the nvidia-settings command line interface allows setting GPU overvoltage. This is allowed on certain GeForce GPUs.

When this option is set for an X screen, it will be applied to all X screens running on the same GPU.

The default for this option is 0 (unsupported features are disabled).
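Because the option is a bit mask, the flag values in the excerpt above can be combined with a bitwise OR, which gives the same result as adding them as long as each flag is listed only once. A small sketch of that arithmetic in shell follows; both helper names are hypothetical and are not part of any NVIDIA tool:

```shell
# Hypothetical helper: OR together the Coolbits flag values passed as arguments
# and print the resulting mask.
combine_coolbits() {
    local mask=0
    local flag
    for flag in "$@"; do
        # Bitwise OR, so listing a flag twice does not change the result.
        mask=$(( mask | flag ))
    done
    printf '%s\n' "$mask"
}

# Hypothetical helper: succeed (exit status 0) if flag $2 is set in mask $1.
coolbits_has() {
    [ $(( $1 & $2 )) -ne 0 ]
}
```

For example, `combine_coolbits 4 8` prints `12`, and `coolbits_has 12 16` fails because the overvoltage flag is not part of that mask.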

I found that I would personally like to have the options enabled by “4” and “8”, and that one can combine Coolbits by simply adding them together. For instance, the ones I wanted (“4” and “8”) added up to “12”, so that’s what I put in my configuration:

    Section "Device"
        Identifier "Device 0"
        Driver "nvidia"
        VendorName "NVIDIA Corporation"
        BoardName "GeForce GTX 470"
        Option "Coolbits" "12"
        Option "RegistryDwords" "PowerMizerEnable=0x1; PowerMizerDefaultAC=0x3;"
    EndSection

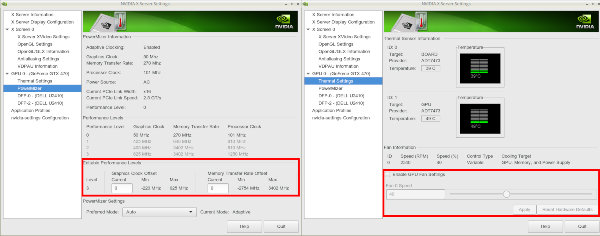

and that resulted in the following options being available within the nvidia-settings utility:


Though the Coolbits portions aren’t required to fix the problems that I was having, I find them to be helpful for maintenance tasks and configurations. I hope, if you’re having problems with the NVIDIA drivers, that these instructions help give you a better understanding of how to work around any issues you may face. Feel free to comment if you have any questions, and we’ll see if we can work through them.

Cheers,

Zach

42 comments


Hello Zach, it’s 2024 and your post about this topic is STILL incredibly useful and often referenced. Have you considered maybe taking over maintenance of the green-with-envy software?

The project is looking for new maintainers and you seem to be quite knowledgeable https://gitlab.com/leinardi/gwe/-/issues/195

Author

Hello Loki,

Thank you for bringing the project to my attention. Unfortunately, I abandoned nvidia products quite some time ago and have only used AMD and/or Intel for GPUs since.

Cheers,

Nathan Zachary

Well, this is still an issue in 2022. I’m running the 390 driver set for the Nvidia 670m and your update allows the clocks to stay low when I’m not gaming. However, I’m finding that the clocks now refuse to ramp up when I am gaming. Any way around this? I’d even be ok with writing a script that comments out the xorg lines and then starts and stops the right service, preferably without a reboot, to temporarily allow the drivers to clock up again. Like stopping lightdm service or just killing the x session and restarting it? Any thoughts? Great find btw. I was so tired of having a GPU temp of 70c when browsing the web, just insane.

Author

The only way around it that I know of is to ditch nVidia. 😉 When I built my new workstation, I switched to an AMD GPU, and it’s wonderful how it “just works”. My guess is that the only way you could get it to work is to write a script using nvidia-smi:

https://developer.download.nvidia.com/compute/DCGM/docs/nvidia-smi-367.38.pdf

Cheers,

Zach

I’ve actually solved it already. So you can comment it out but it’s better to create almost a plan of sorts which can be scripted to edit the xorg on the fly back and forth. Might be fun to build a GUI for this as well, kind of a legacy Nvidia performance profile program. Inspired by comments here

https://forums.developer.nvidia.com/t/power-mizer-difference-between-powermizerdefault-and-powermizerlevel/46884/2

Basically, just script the change, then script a lightdm service restart and log back in to flip back and forth between high perf, adaptive or low power, whatever custom mode you set etc. No need for Nvidia specific coding here, just editing the xorg and doing a service restart gets me there. Thanks for pointing me in the right direction. I always hated that powermizer ramped up when I was just browsing, but I love my nvidia cards for their performance. Even this old sob here is my go to for gamecube emulation, pseudo retro gaming from 2000’s etc. Could support open source initiatives better but I love to tinker, so no complaints really.
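A rough sketch of that approach, assuming the drop-in file from the article already contains a PowerMizerDefaultAC entry. The function name is hypothetical, and the restart command shown in the comments assumes a systemd-based system running lightdm:

```shell
# Hypothetical helper: rewrite the PowerMizerDefaultAC value in an existing
# config file ($1) to the hexadecimal value given as $2 (for example 0x1).
set_powermizer_default_ac() {
    local file="$1"
    local value="$2"

    # Accept only values of the form 0x followed by hexadecimal digits.
    case "$value" in
        0x|0x*[!0-9a-fA-F]*) echo "value must look like 0x1" >&2; return 1 ;;
        0x*) ;;
        *) echo "value must look like 0x1" >&2; return 1 ;;
    esac

    [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }

    # Keep a .bak copy next to the file, then replace only the
    # PowerMizerDefaultAC value; other RegistryDwords entries stay untouched.
    sed -i.bak "s/PowerMizerDefaultAC=0x[0-9a-fA-F]*/PowerMizerDefaultAC=$value/" "$file"
}

# Possible usage as root (this ends the running X session):
#   set_powermizer_default_ac /etc/X11/xorg.conf.d/20-nvidia.conf 0x1
#   systemctl restart lightdm
```

Editing the value in place rather than commenting lines in and out keeps the file valid at every step, and the `.bak` copy gives a quick way back if X fails to start.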

zach

Your article helped me solve one of my problems with my nvidia drivers. However, I’m using an HP Omen with the Ubuntu distribution POP-OS 19.10, and I was having trouble controlling my fan speed manually. I found out about “pwmconfig” on the Linux fancontrol help page, but that showed me the error “/usr/sbin/pwmconfig: There are no pwm-capable sensor modules installed”. I tried every possible thing I was able to find on the internet, and then I found “https://github.com/hbriese/fancon#usage”. It surely helped me understand some important points, but fancon is still not working, so if you could help me with this, it would be really helpful.

Author

Hello Laksh,

If you are using the nvidia proprietary driver, then pwmconfig or similar applications will probably not help you. Nvidia manages fan speeds within the driver itself, so ultimately it will depend on your particular GPU model. You may want to experiment with using the `nvidia-settings` command directly: https://wiki.archlinux.org/index.php/NVIDIA/Tips_and_tricks#Set_fan_speed_at_login
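As a starting point, here is a minimal sketch that only builds and prints such a command so that you can review it before running it. It assumes the fan-control Coolbits value (“4”) is enabled as described in the article, and that your driver exposes the `GPUFanControlState` and `GPUTargetFanSpeed` attributes used on the wiki page linked above. The helper name is hypothetical:

```shell
# Hypothetical helper: print (not run) an nvidia-settings command that switches
# a GPU to manual fan control and sets a target fan speed.
# $1 = GPU index, $2 = fan index, $3 = fan speed as a percentage (0-100).
fan_speed_command() {
    local gpu="$1"
    local fan="$2"
    local pct="$3"

    # Reject anything that is not a whole number.
    case "$pct" in
        ""|*[!0-9]*) echo "percentage must be a whole number" >&2; return 1 ;;
    esac

    # nvidia-settings reports the valid range as 0 to 100 inclusive.
    if [ "$pct" -gt 100 ]; then
        echo "percentage must be between 0 and 100" >&2
        return 1
    fi

    printf 'nvidia-settings -a "[gpu:%s]/GPUFanControlState=1" -a "[fan:%s]/GPUTargetFanSpeed=%s"\n' \
        "$gpu" "$fan" "$pct"
}
```

Running `fan_speed_command 0 0 40` prints the command for the first GPU and fan at 40%. The printed command has to be executed from within the running X session, and whether it works at all still depends on the GPU model and driver version.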

Cheers,

Zach

I got an old GTX 280, with idle temperatures 56 – 60C, clock was always on Performance Level 1 or 2 – within PowerMizer (it has 0,1,2).

Now with Your help it can go to level 0, and idle temperatures are on 46C!

THANK YOU ! (and for the “Coolbits” explanations)

Author

Hi Jack,

I’m glad that the article helped you get the temps down. 🙂

Cheers,

Zach

I am running Ubuntu 16.04 and GPU GTX 1070 FE and trying to overclock the GPU.

I created the folder and file /etc/X11/xorg.conf.d/20-nvidia.conf as you advised, but after reboot, the system clears the file 20-nvidia.conf.

Regardless of whether I add the Coolbits option to xorg.conf.d/20-nvidia.conf or xorg.conf, after a reboot the system changes it back to the original version and overclocking is not an option.

I appreciate your help

Author

Hello Kam,

I don’t personally do much with Ubuntu, so I can’t say why the file would be overwritten. That shouldn’t happen in any distribution with files under `/etc/`. Here are the appropriate manpages for X configuration changes within Ubuntu: http://manpages.ubuntu.com/manpages/trusty/man5/xorg.conf.5.html

Cheers,

Zach

Thanks Zach, most comprehensive view on Nvidia config I’ve found. New Nvidia 1050Ti card. Fan not seen to work. Nvidia-settings shows temp hovering green to amber, at which point the card shuts down, screen goes blank (for seconds) then comes back on?

I think I need fan control.

Fedora 26, 64 bit.

As root

nvidia-settings -q [fan:0]/GPUCurrentFanSpeed

Attribute ‘GPUCurrentFanSpeed’ (localhost.localdomain:1[fan:0]): 33.

The valid values for ‘GPUCurrentFanSpeed’ are in the range 0 – 100

(inclusive).

‘GPUCurrentFanSpeed’ is a read-only attribute.

‘GPUCurrentFanSpeed’ can use the following target types: Fan.

though I’m not sure how this helps in setting the target?

nvidia-settings --assign [fan:0]/GPUTargetFanSpeed=34 fails.

re /etc/X11/xorg.conf.d/ did you create the 20-nvidia.conf file? And why 20 please?

How to find the device ID please?

This area seems poorly documented (as far as I can tell).

Author

Hello Dave,

Glad that you found it helpful. First of all, it sounds like you may actually have a hardware problem if it is getting that hot. I can’t say with certainty, but it is a possibility. Second of all, the output that you provided indicates that, with your version of the Nvidia driver, you cannot adjust the fan speed (it says it is a read-only attribute). What version are you running? I would suggest the latest stable in your distro (but I personally don’t use Fedora). If you’re not using the latest stable in your distribution, then try 384.59 directly from Nvidia.

Regarding the `20-nvidia.conf` file, yes, I created it. I named it starting with “20” because those files are read in numerical order. You don’t have to, though. For your needs, I would say that you should set `Coolbits` to 12 (the combination of the values 4 and 8). If you’re able to use the GUI for `nvidia-settings`, you’ll then see the adjustable fan speed sections.

I hope that information helps.

Cheers,

Zach

Hi,

I try all kinds of things but I never have OC and fan settings 🙁

I’m on Debian 9 and there’s no X11/xorg.conf.d.

I tried to put the conf in /etc/X11/xorg.conf and in /usr/share/X11/xorg.conf.d/20-nvidia.conf but it doesn’t work.

I also tried nvidia-settings -q GPUPerfModes -t which gives me some info on stdout but no possibility to OC.

If you have an idea…

Author

Hello,

If you don’t see any information about overclocking in the output of `GPUPerfModes`, then those options aren’t available on your card and/or with your version of the nvidia driver. Having not used Debian 9, I’m not familiar with any distribution-specific changes to the Xorg hierarchy of configuration files. However, generally, you would want to make the changes in `/etc/X11/xorg.conf.d/20-nvidia.conf`.

Hopefully that helps.

Cheers,

Zach

Nothing works… but I found the solution here : https://wiki.archlinux.org/index.php/NVIDIA/Tips_and_tricks

Everything on this page works for me. Hope it can help someone 🙂

Author

Glad that you found something that helped! The Arch Linux wiki is a great source of information. 🙂

Cheers,

Zach

You are my hero! No fan noise anymore! Thanks a lot!

Author

Glad that the article helped you, Nikolas. 🙂

Cheers,

Zach

In case anyone is interested, your guide worked for me on Debian Stretch with Geforce 9600M GT and the 340xx (proprietary) drivers.

Thanks again!

Zach —

A fairly dumb question from a longtime Ubuntu user — I have an Nvidia GTX 960 and driver 375.39. Powermizer reads performance levels fine, but my fans are running at full tilt all the time. The above coolbits settings look like my solution, but where in Hades do I find the right .conf file?!? I’ve looked in all the places listed at https://www.x.org/archive/current/doc/man/man5/xorg.conf.5.xhtml and still not come up with xorg.conf or xorg.conf.d. I’m sure it’s because I’m still, after 10 years of Linux, learning as I go, but it never hurts to ask!

Author

Hello Seamus,

Not a dumb question at all! Generally, on newer distributions, the configuration files for X don’t exist unless you create them on your own. X nowadays is supposed to be able to function without any additional user configuration. However, with certain hardware, or when you want to customise, you then will need to create the file(s). So, don’t worry if the file doesn’t exist. Simply use your favourite editor to create `/etc/X11/xorg.conf.d/20-nvidia.conf`, restart X, and you should notice the changes take effect.

Hopefully that helps, but if not, just let me know and we’ll keep trying until we get it figured out. 🙂

Cheers,

Zach

Zack,

Thanks for the suggestions; I will keep them in mind.

I discovered this thread, though, on the Ubuntu forum, chock full of people having similar issues with the Nvidia proprietary driver and PowerMizer. It’s not exactly the same presentation, but the fact that the solution is the same as what worked for mine (disable Powermizer) leads me to believe it is actually the same bug.

https://bugs.launchpad.net/ubuntu/+source/nvidia-graphics-drivers-340/+bug/1251042

Many, many people reporting the issue there… and it was closed because the original reporter found a “solution” in disabling PowerMizer, which really isn’t much of a solution at all, particularly on laptops.

Then there is this one, much older (apparently the bug goes all the way back to driver 185!)

https://bugs.launchpad.net/ubuntu/+source/nvidia-graphics-drivers/+bug/456637

That’s so old that I don’t think it would be possible to get a reasonably-working modern Linux installation with Xorg of that vintage.

It would seem that with all of these reports to Ubuntu, word would have reached upstream to Nvidia at this point, don’t you think?

Then

Author

Trust me, nVidia is aware of the problems with their Linux drivers (and especially with PowerMizer). I have had similar experiences with them via their bug tracker, in that they seldom respond, and when they do, it is less than helpful. The issue that I had was relegated to being specific to the vendor that made my graphics card, which I simply don’t buy at all. As much as I don’t want to, I may have to start using AMD graphics cards in my new host builds because they seem to have gained some serious ground in the Linux world.

Cheers,

Zach

Perhaps AMD is looking better with current models… but with old hardware like I am using, the most recent proprietary AMD driver was released several years ago, and it won’t work on anything approaching a current version of the kernel or Xorg. Nvidia’s has some bugs, but at least they are still being released for stuff this old.

I have another, possibly related, issue with PowerMizer… when I unplug the laptop, Cinnamon immediately recognizes the change (as shown in the system tray icon), but the Nvidia driver keeps right on thinking it’s on AC. Whatever it booted up with, that’s what it thinks it always is.

I decided to do a search for that, and I saw some messages in Debian’s bug tracker where people (five years ago!) had the same issue (https://bugs.debian.org/cgi-bin/bugreport.cgi?bug=629418). They marked it as WONTFIX, saying that it’s an unsupported series (of what?)

It may seem trivial to them since they don’t encounter this particular bug in their daily use, but it’s that kind of attitude that turns people off of Linux. I can’t use even slightly older Nvidia drivers on my setup to see if they work differently without major surgery to downgrade the Xorg version (trying to uninstall and replace it with another package results in about a dozen things being removed and not replaced unless I do it manually). Meanwhile, I’m using a three year old Windows driver without any issue at all.

Still not giving up, though.

Author

You definitely have a point about the older hardware. My graphics card is quite old as well, and at least the driver works for the most part. I’ve thought about switching to an open-source driver since I essentially don’t use any of the 3D functions, but have never gotten around to it.

The biggest problem here is that the proprietary nVidia driver is a BLOB, and thus, a black box into which we can’t really see. It makes it quite difficult to troubleshoot problems without working directly with nVidia (i.e. sending logs, awaiting their responses, sending additional logs, ad nauseam).

I couldn’t agree with you more regarding the attitude turning people away from Linux. That being said, the vast majority of developers are donating their time, so the pay incentive isn’t there. It’s unfortunate, but seems to be the way of the FLOSS world.

I would stick with my original recommendation of working directly with nVidia on the bugs that you’re encountering, but can also suggest that you might want to try a different distribution as well (possibly one that has more flexibility). If you’re interested in really delving into the depths of Linux, I can provide my completely biased perspective that Gentoo would be a great choice. It will undoubtedly come with frustration as you learn the inner workings, but you will have infinitely more flexibility with regard to your Xorg and nVidia options. For instance, right now, these are the versions available for each package:

https://packages.gentoo.org/packages/x11-base/xorg-server

https://packages.gentoo.org/packages/x11-drivers/nvidia-drivers

Just food for thought.

Cheers,

Zach

Zach,

Thanks for the offer… I might just take you up on that!

I thought I had a solution here, but it turned out not to be. I tried Mint 13, and I saw that nvidia-173 was in the official repo. I tried it out and watched the nvidia-settings program as the power level dropped… and went to 0 without a problem.

I went through a whole thing trying to install that in Mint 18, then 17.3 (where it’s in the repo, but won’t install– unsolvable dependencies), then 17.2 (installs, but X server won’t start after boot), then 17.1 (installs, and X server starts after boot).

17.1 seemed like the answer, but soon I realized all was not well. Scrolling performance in Nemo and the Cinnamon system settings was terrible… like a slide-show. So was the actual slideshow shown during the login screen… the fades in and out were awful.

Even though I was able to look in the nvidia-settings and see the power state of the GPU, I realized that the nouveau driver was still the one in use, both in the driver manager and as shown by lspci. I don’t know how the driver managed to get loaded in enough to be able to show me the power level, but it wasn’t doing the rendering.

It turns out that the 173 driver doesn’t support my card at all. The Windows driver of similar number (175) does; it’s what I am using in Windows 7 on the same laptop. Bah!

After failing to get Linux restored and working well again by restoring just the Linux partitions using my Windows imaging tools (both Macrium Reflect and Acronis True Image failed), I ended up restoring the entire drive image, which did work. I have yet to find a Linux backup tool that’s even close to what I am used to on Windows, and the Windows programs do work with ext4 volumes in sector by sector mode, but selective restoration just did not work, and I am not sure why (I’ve done it several times before). Other than using up some of my SSD’s TBW rating (set artificially low by Samsung for warranty purposes), no harm done.

No good done either, though. I’ll be looking into reporting the bug (jumping through Nvidia’s hoops to do it). One thing I did think of… should I be reporting it to Nvidia or Mint? I read some stuff about this, where people were reporting bugs to the author of a program included in a given distro, but they’d already been fixed (and the distro didn’t have the new version yet). Theoretically, if Mint can’t fix it because it’s upstream, they would report it themselves… but I don’t know how likely that is.

Author

Ah, behold the lovely world of dependency resolution. My biggest reason to use Gentoo is that Portage typically does a wonderful job of resolving deps. I personally like source-based distributions, but I understand that they take a good amount of time due to compilations (especially of larger packages like guile, Chromium, et cetera).

As for backing up, I tend to favour `dd` for any lower-level backup needs, and `rsync` for higher-level, but they may not work for everyone. I just enjoy the flexibility. If you’re looking for something that is more like an imaging tool, then you might want to take a look at Clonezilla.

As for your reporting of the nVidia problems, I would say that it certainly won’t hurt to file a bug with your distribution (Mint) as well. You’re likely correct in that nVidia probably still won’t fix the problem upstream, but it is certainly worth a shot. At the end of the day, we can only do as much as the hardware vendors allow. I hate to say that AMD is seemingly a better choice for Linux graphics cards these days, but that is how it is looking. 🙁

Cheers,

Zach

The driver version is 361.93.02. I don’t see an asterisk in the list when I ran the command you mentioned, so I am concerned I am stuck without an option to overclock.

Author

Ah, then that probably does mean that the driver does not support clock modulation for your particular card. You may want to post in the nVidia DevTalk Linux Forum just to make sure, but it would seem that the performance modes are locked on your card.

Cheers,

Zach

Sorry for the n00b question, but I am missing something obvious. I did everything you said here and have looked many other places, but the overclock offsets I am entering don’t seem to be taking effect. I don’t see any sign my GPU is actually performing at a higher level, no matter what numbers I enter.

Before I even tried to overclock, I noticed that the performance level always seems to kick down to Level 2. I think it may be that the offsets only work for level 3, but my GPU didn’t jump to level 3 before.

I have tried various settings for PowerMizer, but keep it on “Prefer Maximum Performance” most of the time. Either way, it remains in Level 2.

Perhaps it has something to do with what I’m doing with the GPU (a GTX 960). I’m running 5 simultaneous CUDA tasks, but even without tweaking any settings, the GPU temp only goes as high as 53C. I know there is a lot more overhead performance I can squeeze out.

Author

Hi Jonathan,

All questions are welcome, and I don’t think that yours would fall under the “n00b” category. 😉 It could be a regression in the version of the driver, so what version are you using? Also, try doing `nvidia-settings -q GPUPerfModes -t` to make sure that the PerfModes are editable for your card. If they are not editable, then any changes will simply be disregarded.

Cheers,

Zach

No, I’m not giving up on Linux! I’ve got both of my primary PCs (the two I mentioned above; the laptop and my Sandy i5 desktop) set to dual-boot Mint and Windows 7, and it works really well. I’ve got quite a bit of space allocated to the Linux partitions (currently /home, swap, and root), not just “trying it out” tiny partitions.

With Windows 10’s spying, forced updates, nutty half and half UI, advertising, Cortana, and the amount of control it steals from me and gives to Microsoft… there’s just NO way I will accept it. When Windows 7’s security updates end, I have nowhere else to go if Windows 10 doesn’t evolve into something usable by then… and given how dedicated MS is to this new direction, I seriously doubt it will.

Meanwhile, Linux keeps getting better… there are still a lot of areas where Windows is ahead (I wish Linux had something like the Device Manager, for example), but Linux is always improving, while Windows just gets worse (each new build of Win 10 seems to have even more ways that control is taken from the user and given to MS). And Linux (in Mint Cinnamon form) is pretty good as it is!

I did already post about it in the Mint forum. I posted followups to my own thread as I learned more (giving it a bump each time), but no one ever replied.

Mint is still very usable on the laptop– it’s just not as power efficient as it should be, which only really matters when on battery, which isn’t a strong point for this unit anyway. It pulls about 5 watts (measured at the wall) more when idling in Windows (where PowerMizer works well and it can go to the lowest power setting) than it does in Linux with the GPU set to “Prefer Max Performance.” It’s not a huge difference between 27w and 32w; even in Windows, this laptop is not going to go more than 2 hours on a single battery charge, and that’s if I manage to keep the GPU and CPU throttled down to idle levels, which isn’t likely if I’m actually doing anything with it (and if I’m not, why not just turn it off?).

Bumblebee is for Optimus, right? This laptop doesn’t have that… it’s on the Nvidia GPU all the time.

Author

Great! Glad to hear that you’re not letting a setback stop you. 🙂

For the “Device Manager”, what exactly are you looking for? There are a ton of tools out there that will show you the devices on your system. I, personally, prefer terminal-based applications over ones with GUIs, so I like commands like `lspci`, `lsusb`, and even `dmidecode` in certain instances. One of my favourite things about Linux is that you can configure everything to be exactly how you want it. For instance, I don’t like large desktop environments and tend to be a minimalist. So, I go with Openbox as my Window Manager, and only add the applications that I really want.

You’re definitely correct regarding Bumblebee being for Optimus. I was thinking that the 220M fell into that category; my fault.

If you would ever like to discuss anything Linux-related, just let me know and we can start up an email thread. I’m glad to help in whatever ways I can, or at least point you in the right direction.

Cheers,

Zach

Thanks. I looked in the Nvidia forum, and their requirements for asking for help (which seems also to be how a bug is reported for end users like me) are beyond my meager Linux knowledge… I will have to learn how to do some things first.

In Windows, I would try older drivers and see if any of them helped for an issue like this. I’ve found that older drivers often work better than newer ones, particularly with hardware that is not the latest generation. My Kepler desktop GPU runs *horribly* in WoW using the latest 376 driver; it frequently drops to 30 or 15 fps (vsync is on, 60hz refresh), with the GPU registering nearly zero load and dropping to the lowest performance level. When I roll back to 358, I’m at a solid 60 FPS nearly always.

It seems that Nvidia only tests currently-sold products adequately. There was some controversy when the Maxwells were released and the performance of Kepler GPUs “mysteriously” dropped with the latest drivers, which some people interpreted as Nvidia trying to cripple older products to make the new ones more appealing. I don’t necessarily go that far, but the idea that they were optimized for Maxwell (and now Pascal) with little thought given to previous generations is not hard to believe.

Similarly, for the same GPU I initially posted about here, the 220M in the laptop, there’s an annoying pause for ~0.5 second that frequently appears in Plants vs. Zombies (in Windows) when using the latest 340-series driver (342.00) (the newest for this GPU). With the above paragraph’s concept in mind, I decided to seek out a driver that would have been available late in the 220M’s supported life (most likely to have been as fully optimized for that platform as a driver can be), and I came up with 277, if I recall. Sure enough, the pausing was gone in PvZ, and performance was unchanged (within the margin for error). PvZ is an old game itself, of course.

With Linux Mint 18, choosing a new driver is not an option for the laptop. I tried a few older Linux drivers from Nvidia, and they all failed to install. When I looked in the release notes, I saw that only the very latest releases of legacy Nvidia drivers (342.00 and 304.132) support X server 1.18, which Mint 18 uses. I tried 304.132, which installed just fine, but Cinnamon crashes into fallback mode after login every time with it, and the X server freezeup when the GPU settles down to the lowest performance level still happens.

So 342.00 is my only choice for driver versions, in contrast with dozens (hundreds?) of releases that will work with Windows 7. Aside from Nouveau, of course (which also runs at the highest speed all the time, as I have read).

I will figure out how to do what Nvidia wants on their forum and see what they come up with.

Thanks for trying to help, though, I appreciate it!

Author

Hi again,

I completely agree with what you said about nVidia not really testing their newer drivers for anything but the latest chipsets. I ran into that with my GeForce GTX 470, which is what started this whole post here on my blog. It’s unfortunate, but AMD is actually starting to be the leader in Linux graphics (behind Intel, which seems to “just work” most of the time). If you have trouble with the Linux portion of it, just let me know, and I’ll do what I can to help you get everything submitted to them. The truly sad part, though, is that they probably will just bounce it back to you saying it isn’t their problem. 🙁

You could, of course, either disable PowerMizer (though that’s not ideal), or even try Nouveau. I also don’t know much about this project, but I’ve read some good things about Bumblebee. Might be worth taking a look for the 220M.

Anyway, I wish you the best of luck, and I hope that you don’t shrug off Linux because of this setback. There are some frustrations, sure, but having used Linux as my only full-time OS since 1996, I couldn’t fathom going back to anything else. Also, you’ll generally find the various fora out there to be full of helpful people. You might even want to try posting in the Linux Mint forum.

Cheers,

Zach

Zach,

Thanks for the reply.

There are no editable power levels as shown by the command above, but I used NiBiTor and a hex editor to make the change you suggested in the BIOS, and it still crashes when it steps down into the lowest power setting, even though that level was set to the same values as the next one up.

Author

Darn. That would have been a decent workaround. I think that at this point, you’re simply going to have to file a bug with nVidia about the problem that you’re seeing. They will likely have you run their proprietary tool in order to submit a support bundle. The only workaround in the interim is to set the performance level statically. 🙁

Cheers,

Zach

Zach,

Your page has helped me narrow down an issue I’ve been having. The system is an Asus F8SN laptop with an Nvidia GT220M GPU.

In Windows, it works fine. In Linux Mint 18 (Cinnamon, x64), though, I am having an issue with the proprietary Nvidia driver (340.98, the latest driver for that card). The PowerMizer settings in the NVIDIA X Server Settings program show four performance levels: Level 0, 169 MHz GPU clock; Level 1, 275 MHz; Level 2, 400 MHz; Level 3, 550 MHz.

When the system starts, the GPU is running in level 3 initially, then level 2, then level 1… all fine so far.

The millisecond the level drops to 0, though, the Xorg server freaks out. The mouse still moves the arrow, but the screen no longer updates (other than the mouse pointer), and no keypresses or mouse clicks do anything. Eventually, the mouse pointer stops responding to mouse inputs too. After a reboot, the Xorg log says that it’s an EQ overflow, with the log filling with hundreds of entries.
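A quick way to confirm this kind of failure after a reboot is to count the matching entries in the previous session's log. This is only a sketch: the helper name `count_eq_overflow` is hypothetical, and the default log path (`/var/log/Xorg.0.log.old`) is an assumption that may differ on your distribution.

```shell
#!/bin/sh
# Count lines mentioning "EQ overflow" in an Xorg log.
# Assumption: the log of the previous X session is kept as
# /var/log/Xorg.0.log.old; pass another path as the first argument if not.
count_eq_overflow() {
    log="${1:-/var/log/Xorg.0.log.old}"
    if [ ! -r "$log" ]; then
        echo "cannot read $log" >&2
        return 1
    fi
    # grep -c prints the number of matching lines; -i ignores case.
    # "|| true" keeps a zero count from being reported as a failure.
    grep -ci 'EQ overflow' "$log" || true
}
```

Calling `count_eq_overflow` with no argument checks the assumed default path; a large count right after a freeze would match the symptom described above.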

I tested this by setting PowerMizerDefaultAC to 0x3 and PerfLevelSrc to 0x2222 in xorg.conf, and sure enough, Linux black-screened before I even got the password prompt on the next boot. I then set PowerMizerDefaultAC to 0x2 in recovery mode from the command line, and Linux started and runs just fine, with the Nvidia Settings program confirming that it’s locked at 275 MHz.
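For anyone following along, the working setting can be sketched as a Device section like the one the small helper below prints. Several parts are assumptions rather than details given in this comment: the values are passed through the nvidia driver's "RegistryDwords" option, PowerMizerEnable=0x1 is included alongside them, the Identifier is borrowed from the article, and the helper name `nvidia_device_section` is made up for illustration.

```shell
#!/bin/sh
# Print an X.Org "Device" section that pins the PowerMizer level on AC power.
# Assumptions: the settings go through the "RegistryDwords" option, and
# PowerMizerEnable=0x1 is set as well; neither is spelled out in the comment.
nvidia_device_section() {
    level="${1:-0x2}"   # value for PowerMizerDefaultAC (0x2 was the stable one here)
    cat <<EOF
Section "Device"
    Identifier "Device 0"
    Driver     "nvidia"
    Option     "RegistryDwords" "PowerMizerEnable=0x1; PerfLevelSrc=0x2222; PowerMizerDefaultAC=${level}"
EndSection
EOF
}

# Show the section for the value that kept the system stable.
nvidia_device_section 0x2
```

The output can be reviewed and then copied into a file such as /etc/X11/xorg.conf.d/20-nvidia.conf. Printing first, rather than writing the file directly, keeps a mistyped value from breaking the next boot.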

As soon as that performance level hits the lowest level, it falls apart.

I had previously set the performance level to maximum via the xorg.conf file as you describe (though the numbering scheme is the reverse of what is displayed in the Settings program, for some reason), and it does indeed prevent the issue… but it’s not really the best choice for a laptop to have the GPU at max clock rate all the time, and I don’t really want to simply lock it to 275 MHz on battery. I’d like it to be able to ramp up as needed.

I’d love to know what causes this and fix it, but failing that, if I could just set it to adaptive mode and have it self-select all but the lowest (169 MHz) performance level, that would be a big improvement. The power difference between that and the next level up won’t be that much!

Any ideas? Thanks in advance!

Author

Hi Ascaris,

You definitely should file a bug for nVidia as that is not the intended behaviour of the performance levels. However, in the interim, I would suggest running

nvidia-settings -q GPUPerfModes -t

to see if any of the performance modes are editable. If they are, a workaround would be to set the specifics of performance level 0 to match level 1. That way, you’re effectively disabling performance level 0. There is no way that I know of to actually disable a particular performance level, so this workaround may be your only option until nVidia fixes the bug.

Hope that helps.

Cheers,

Zach

Yeah, you’re a king! I’ve had the same problem for a long time, and you solved it! You’re the best! Thanks!

Author

Glad that the article helped you. 🙂